
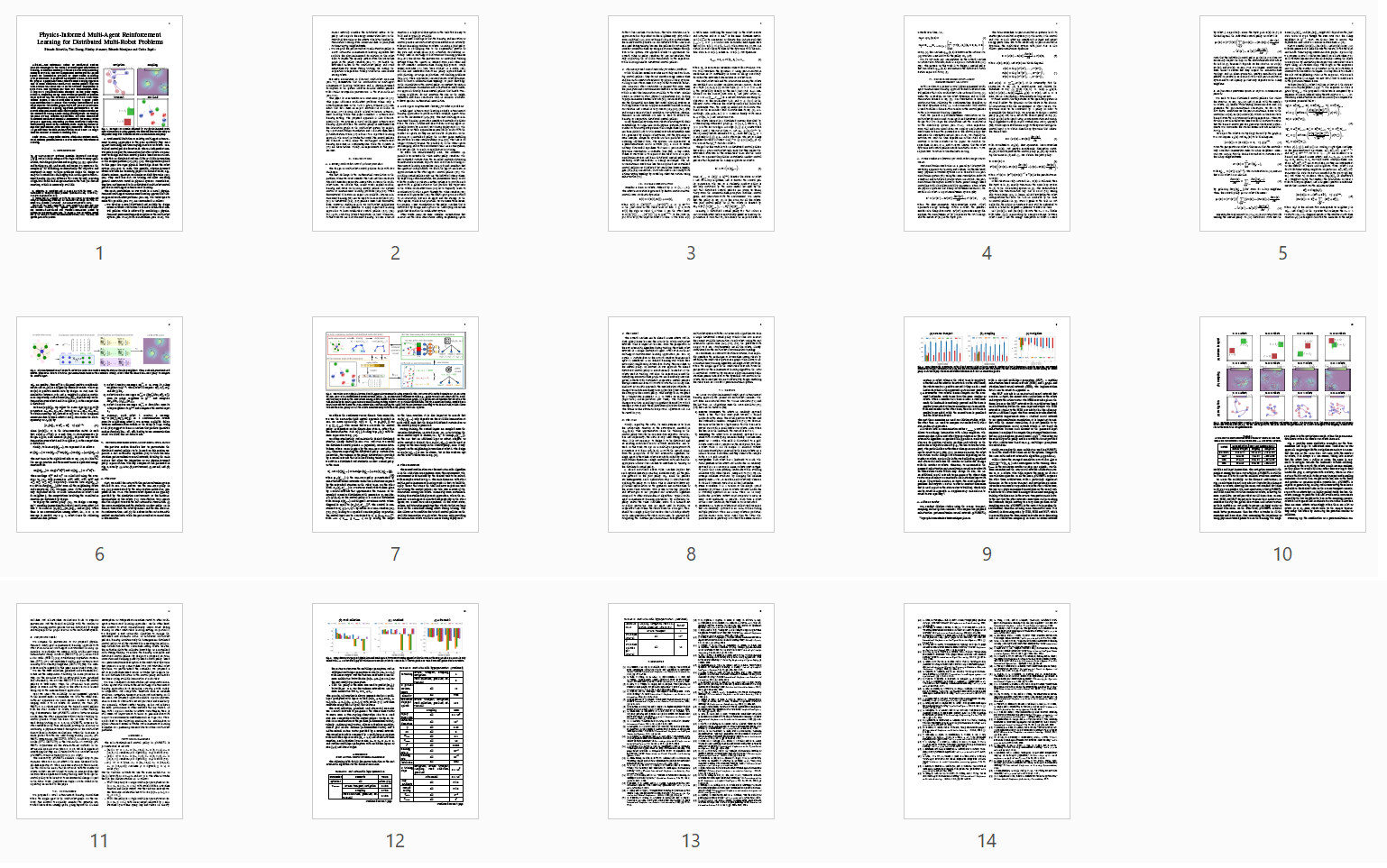

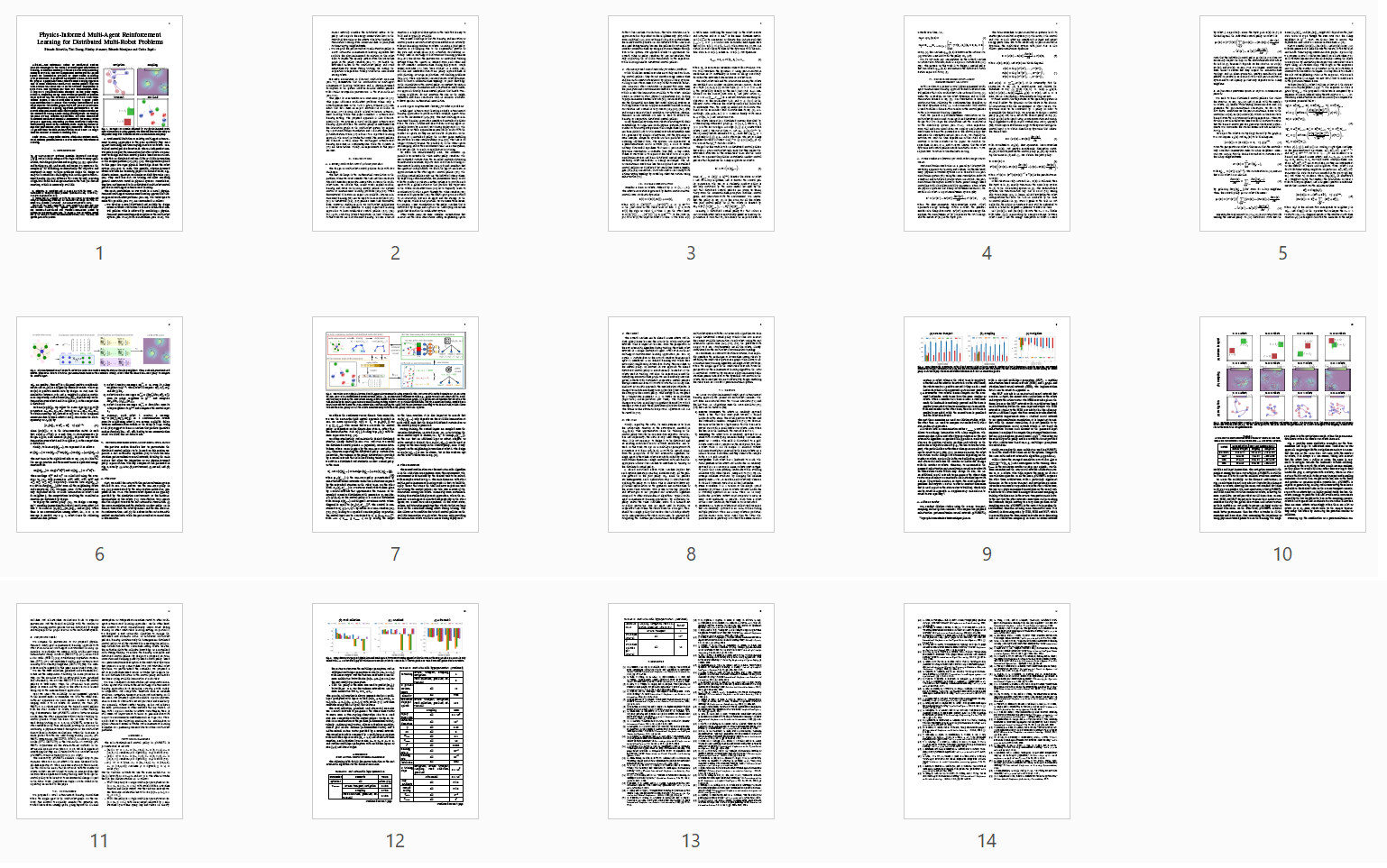

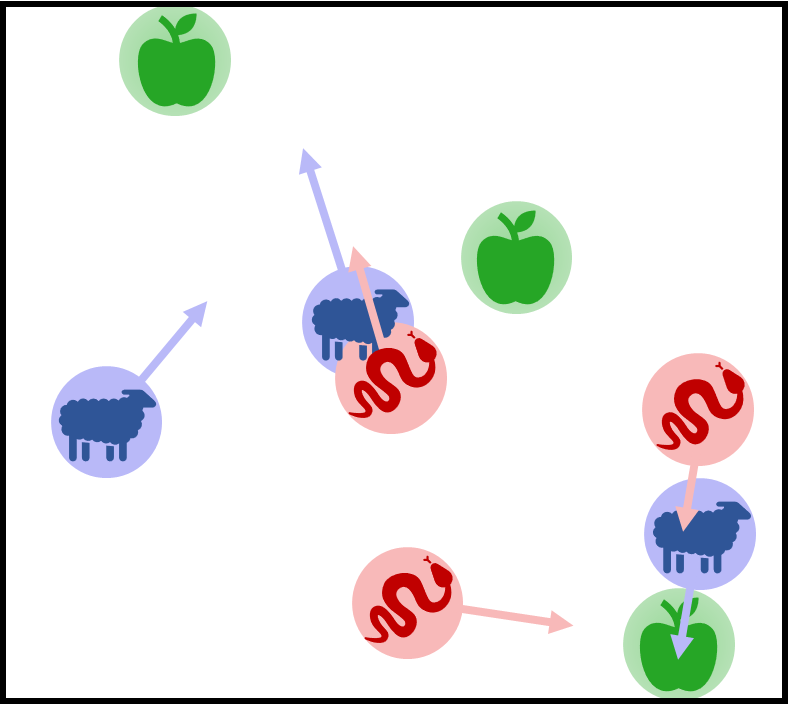

Examples of scenarios addressed by our physics-informed multi-agent reinforcement learning approach. The scenarios cover a wide variety of cooperative and competitive behaviors and levels of coordination complexity. From left to right: navigation, informative sampling, cooperative transport, and food collection while evading predators.


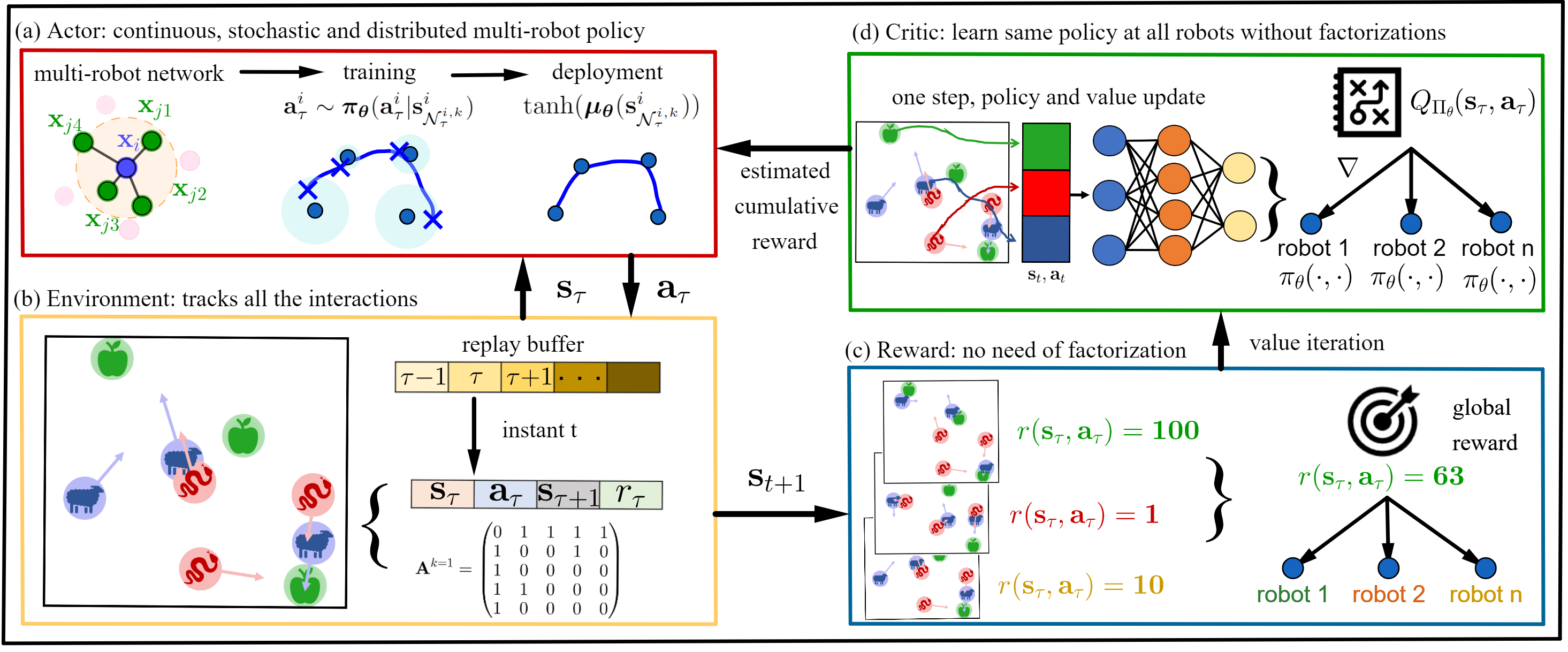

Overview of our physics-informed multi-agent reinforcement learning approach. The soft actor-critic method consists of: (a) an actor, the IDA-PBC self-attention-based neural network policy; (b) an interactive environment that contains, e.g., goals to reach and adversaries to avoid, and keeps track of the correlations among robots encoded in the communication graph; (c) a global reward function that describes the task and steers the multi-agent reinforcement learning training without any particular factorization; and (d) a centralized critic, modeled as a neural network that learns the state-action value function relating the agents and the environment according to the desired task. This design allows a single policy to be learned for all robots simultaneously, with shared policy and value updates.
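For readers new to soft actor-critic, a centralized critic trained on a single global reward is typically regressed toward a soft Bellman target. The sketch below shows the standard clipped double-Q form of that target from the general SAC literature; it is not taken from the pH-MARL code, the caption does not say whether twin critics are used, and the names `soft_td_target`, `alpha` (entropy temperature) and `gamma` (discount factor) are our own.

```python
import numpy as np

def soft_td_target(reward, done, q1_next, q2_next, log_prob_next, gamma=0.99, alpha=0.2):
    """Standard SAC critic target (assumed form, not the authors' code):

        y = r + gamma * (1 - done) * (min(Q1', Q2') - alpha * log pi(a' | s'))

    `reward` is the single global team reward (no per-robot factorization);
    the target-critic values and log-probabilities are evaluated at next
    actions sampled from the shared policy.
    """
    reward = np.asarray(reward, dtype=float)
    done = np.asarray(done, dtype=float)
    # Clipped double-Q: keep the smaller of the two target-critic estimates.
    q_next = np.minimum(np.asarray(q1_next, dtype=float), np.asarray(q2_next, dtype=float))
    # Entropy bonus: subtracting alpha * log pi favors more stochastic policies.
    soft_value_next = q_next - alpha * np.asarray(log_prob_next, dtype=float)
    # Do not bootstrap past terminal transitions.
    return reward + gamma * (1.0 - done) * soft_value_next

# One non-terminal and one terminal transition (illustrative numbers).
y = soft_td_target(reward=[1.0, 1.0], done=[0.0, 1.0],
                   q1_next=[10.0, 10.0], q2_next=[12.0, 12.0],
                   log_prob_next=[-1.0, -1.0])
print(y)  # first entry: 1 + 0.99 * (10 - 0.2 * (-1)) = 11.098; second entry: 1.0
```

Because every robot shares the same actor, one such target per transition is enough to update both the critic and the shared policy.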


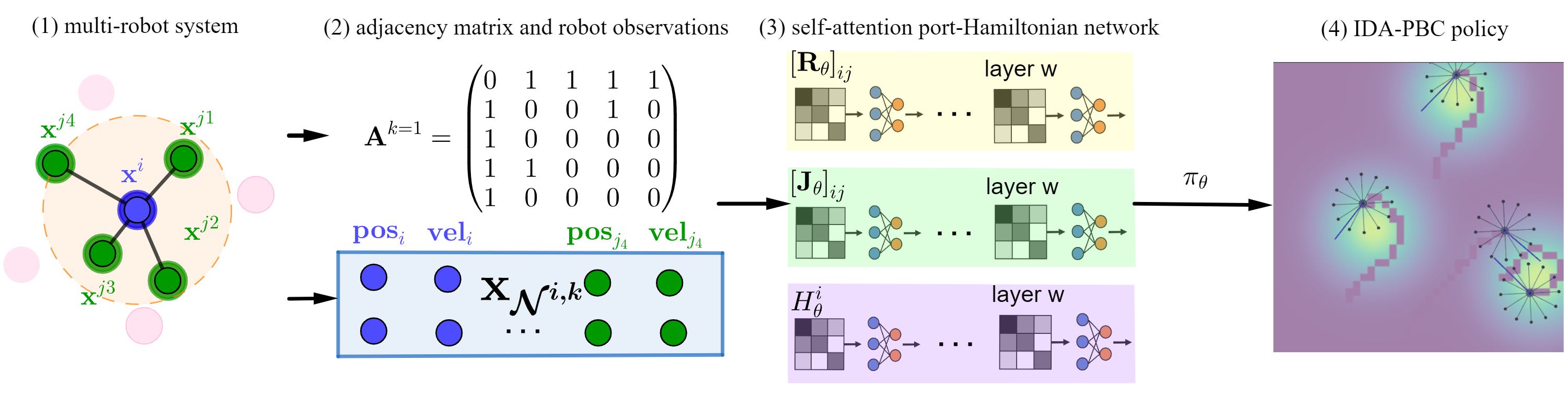

Physics-informed neural network. Robot i receives information about the states of its k-hop neighbors. Then, each self-attention-based module processes the data to obtain the port-Hamiltonian terms of the controller. Finally, robot i uses the learned IDA-PBC policy to compute its control input.
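As background, IDA-PBC (interconnection and damping assignment passivity-based control) chooses the input so that the closed loop matches a desired port-Hamiltonian system. For an open-loop model `x_dot = (J - R) ∇H + G u` and a target `x_dot = (J_d - R_d) ∇H_d`, the textbook solution is `u = (G^T G)^{-1} G^T [(J_d - R_d) ∇H_d - (J - R) ∇H]`, which is exact when the matching condition holds. The sketch below only evaluates that formula for given matrices; in pH-MARL the desired terms come from the self-attention modules, but the function name `ida_pbc_control`, its argument layout, and the point-mass example are hypothetical illustrations, not the authors' implementation.

```python
import numpy as np

def ida_pbc_control(G, J, R, grad_H, J_d, R_d, grad_H_d):
    """Evaluate the generic IDA-PBC matching law.

    Open loop:    x_dot = (J - R) grad_H + G u
    Desired loop: x_dot = (J_d - R_d) grad_H_d
    Control:      u = (G^T G)^{-1} G^T [(J_d - R_d) grad_H_d - (J - R) grad_H]

    The closed loop equals the desired one exactly only if the bracketed
    term lies in the range of G (the matching condition).
    """
    mismatch = (J_d - R_d) @ grad_H_d - (J - R) @ grad_H
    # Least squares gives the left pseudo-inverse solution for full-column-rank G.
    u, *_ = np.linalg.lstsq(G, mismatch, rcond=None)
    return u

# Example: 1-D point mass with state x = [q, p] and H = p^2 / (2 m).
m, k, d = 1.0, 2.0, 0.5           # mass, desired stiffness, injected damping
q, p = 1.0, 0.4
J = np.array([[0.0, 1.0], [-1.0, 0.0]])
R = np.zeros((2, 2))
G = np.array([[0.0], [1.0]])      # the input force acts on the momentum only
grad_H = np.array([0.0, p / m])
# Desired energy H_d = k q^2 / 2 + p^2 / (2 m), with damping on p.
J_d = J.copy()
R_d = np.array([[0.0, 0.0], [0.0, d]])
grad_H_d = np.array([k * q, p / m])

u = ida_pbc_control(G, J, R, grad_H, J_d, R_d, grad_H_d)
print(u)  # a PD law, u = -k*q - d*p/m, i.e. about -2.2
```

In this toy case the matching law reduces to a spring-damper controller, which shows how shaping `H_d` and `R_d` directly determines the closed-loop behavior.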


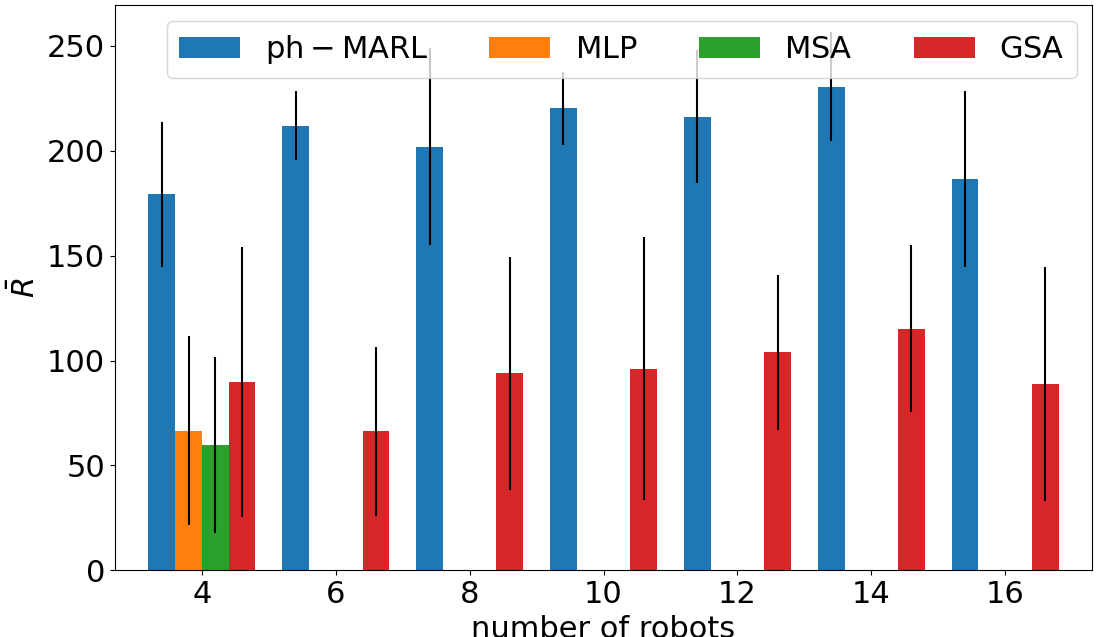

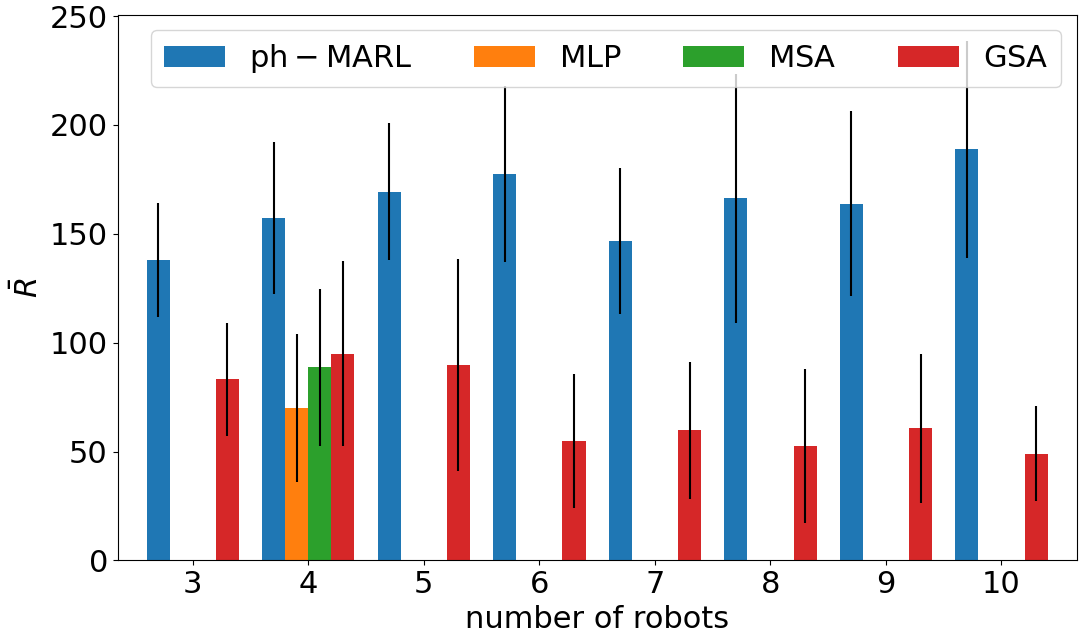

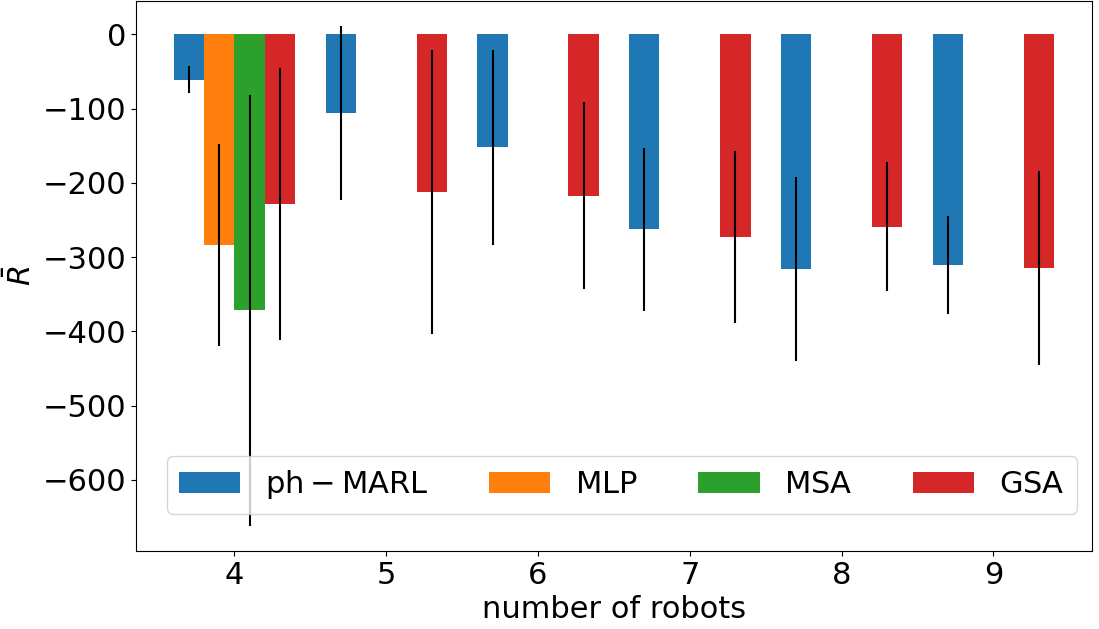

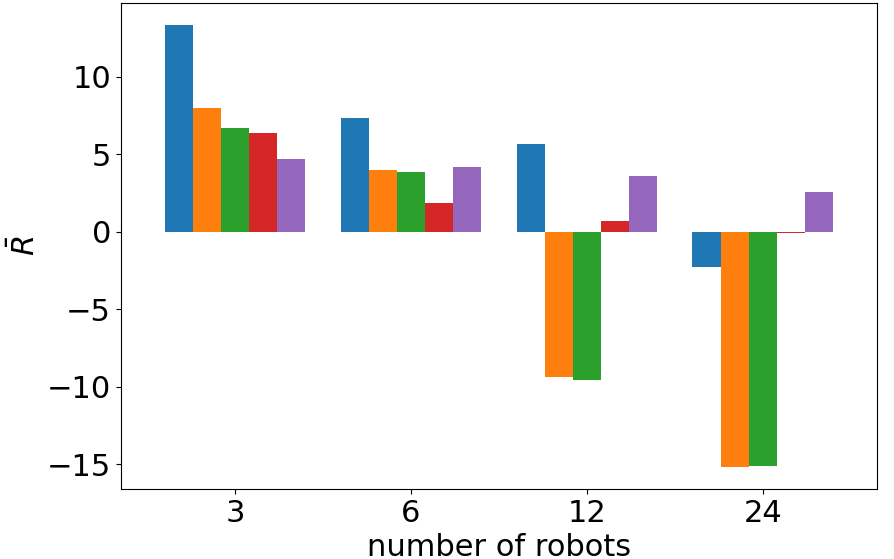

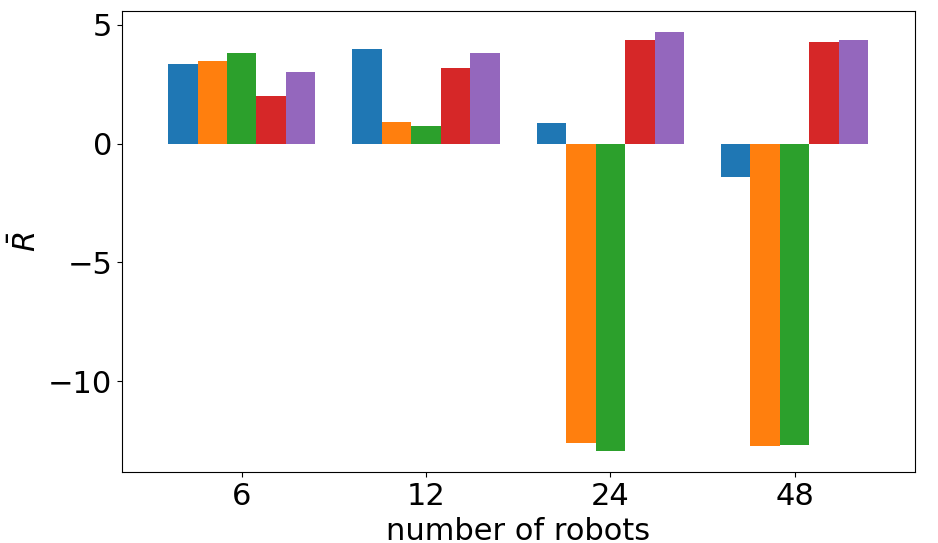

Performance comparison of the ablated control policies as the number of robots at deployment increases. In all scenarios, our proposed combination of port-Hamiltonian modeling and self-attention-based neural networks achieves the best cumulative reward without any further training of the control policy. Each bar displays the mean and standard deviation of the average cumulative reward over 10 evaluation episodes.
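The bar statistics can be computed from evaluation rollouts roughly as follows. This sketch assumes each episode is summarized by one cumulative reward (the per-step global reward summed over the episode) and reports the population standard deviation across episodes; both choices, the helper name `summarize_episodes`, and the toy data are assumptions, since the caption does not specify them.

```python
from statistics import mean, pstdev

def summarize_episodes(episode_rewards):
    """Return (mean, std) of the cumulative reward across evaluation episodes.

    episode_rewards: list of episodes, each a list of per-step global rewards.
    Uses the population standard deviation (an assumption).
    """
    cumulative = [sum(steps) for steps in episode_rewards]
    return mean(cumulative), pstdev(cumulative)

# Toy data: 10 evaluation episodes of 3 steps each (illustrative numbers only).
episodes = [[1.0, 2.0, 3.0]] * 5 + [[0.0, 1.0, 1.0]] * 5
mu, sigma = summarize_episodes(episodes)
print(mu, sigma)  # 4.0 2.0
```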


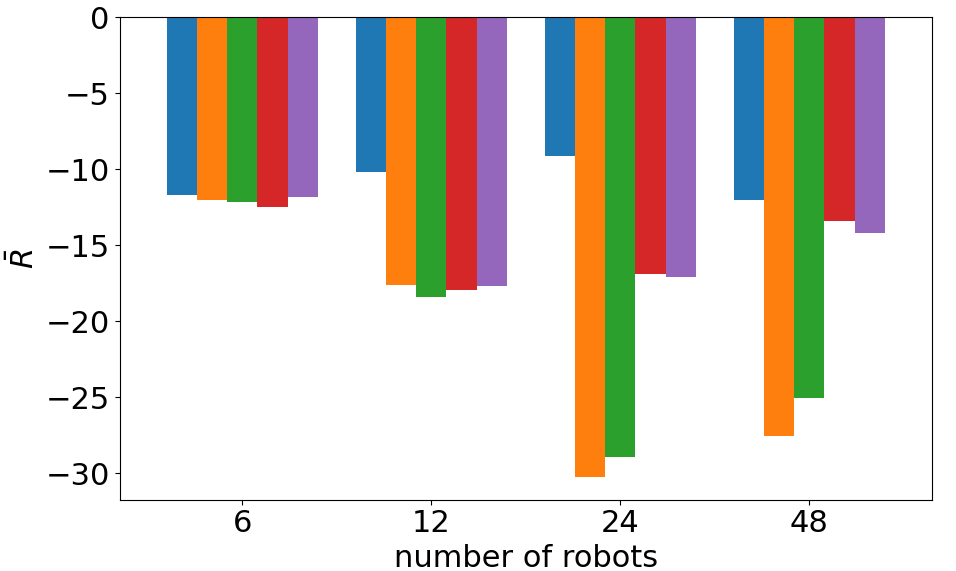

Comparison of our physics-informed multi-agent reinforcement learning approach with other state-of-the-art approaches. pH-MARL is trained only with 4 robots and deployed with different team sizes, whereas the state-of-the-art control policies are trained separately for each specific number of robots.
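One general reason a policy trained with 4 robots can run on other team sizes is that each robot processes a variable-size set of k-hop neighbors with the same parameters. The sketch below illustrates that mechanism only, not the pH-MARL network itself: it collects k-hop neighbors from a communication-graph adjacency list by breadth-first search and pools their features with single-head scaled dot-product attention, so no parameter shape depends on the number of robots. The line-shaped graph, feature sizes, weight matrices and function names are all hypothetical.

```python
from collections import deque
import numpy as np

def k_hop_neighbors(adjacency, i, k):
    """Robots within k communication hops of robot i (excluding i), via BFS."""
    hops = {i: 0}
    queue = deque([i])
    while queue:
        node = queue.popleft()
        if hops[node] == k:
            continue  # do not expand beyond k hops
        for nbr in adjacency[node]:
            if nbr not in hops:
                hops[nbr] = hops[node] + 1
                queue.append(nbr)
    return sorted(n for n in hops if n != i)

def attend(x_i, x_neighbors, W_q, W_k, W_v):
    """Single-head scaled dot-product attention of robot i over its neighbors."""
    q = x_i @ W_q                              # query, shape (d,)
    K = x_neighbors @ W_k                      # keys, shape (n, d)
    V = x_neighbors @ W_v                      # values, shape (n, d)
    scores = K @ q / np.sqrt(q.shape[0])
    weights = np.exp(scores - scores.max())    # numerically stable softmax
    weights /= weights.sum()
    return weights @ V, weights                # pooled (d,), weights (n,)

rng = np.random.default_rng(0)
feat_dim, d = 4, 8
# Shared parameters: shapes depend only on feature sizes, never on team size.
W_q, W_k, W_v = (rng.normal(size=(feat_dim, d)) for _ in range(3))

for n_robots in (4, 8):
    # Hypothetical line-shaped communication graph 0 - 1 - ... - (n_robots - 1).
    adjacency = {r: [s for s in (r - 1, r + 1) if 0 <= s < n_robots]
                 for r in range(n_robots)}
    states = rng.normal(size=(n_robots, feat_dim))
    nbrs = k_hop_neighbors(adjacency, i=2, k=2)
    pooled, w = attend(states[2], states[nbrs], W_q, W_k, W_v)
    print(n_robots, nbrs, pooled.shape)  # 4 [0, 1, 3] (8,)  then  8 [0, 1, 3, 4] (8,)
```

The pooled vector has the same size whatever the neighborhood size, and because the softmax weights are permuted together with the values, the result does not depend on the order in which neighbors are listed.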


Some Qualitative Results


Examples of multi-robot scenarios for different initial conditions and numbers of robots. Note that some aspects of the environment do not scale with the team size, e.g., the size and weight of the box in the reverse transport scenario, the number of hot spots in the sampling scenario, or the size of the arena in the navigation scenario. From top to bottom: reverse transport, navigation, sampling, food collection, grassland, adversarial.


Code

Citation

If you find our paper or code useful for your research, please cite our work as follows.

E. Sebastian, T. Duong, N. Atanasov, E. Montijano, C. Sagues. Physics-Informed Multi-Agent Reinforcement Learning for Distributed Multi-Robot Problems. Under review at IEEE Transactions on Robotics, 2024.

@article{sebastian24phMARL,

author = {Eduardo Sebasti\'{a}n AND Thai Duong AND Nikolay Atanasov AND Eduardo Montijano AND Carlos Sag\"{u}\'{e}s},

title = {{Physics-Informed Multi-Agent Reinforcement Learning for Distributed Multi-Robot Problems}},

journal = {arXiv preprint arXiv:2401.00212},

year = {2024}

}

Acknowledgements

This work has been supported by ONR N00014-23-1-2353 and NSF CCF-2112665 (TILOS), by Spanish projects PID2021-125514NB-I00, PID2021-124137OB-I00 and TED2021-130224B-I00 funded by MCIN/AEI/10.13039/501100011033, by ERDF "A way of making Europe" and by the European Union NextGenerationEU/PRTR, DGA T45-23R, and Spanish grant FPU19-05700.
